In disaster zones such as collapsed buildings and tunnels filled with smoke or debris, visibility is often the first thing to disappear. For unmanned ground vehicles (UGVs) tasked with search and rescue (SAR), this is more than an inconvenience: it is a primary technical barrier. Cameras are blinded, LiDAR beams scatter, and human operators lose confidence in what the robot “sees.” In these extreme conditions, one sensing modality consistently cuts through the chaos: radar.

In the CARMA project, radar is emerging as the backbone of robust autonomy. It offers a sensing layer that keeps performing where optical sensors degrade, enabling UGVs to navigate, map, and localize even in the harshest environments. Here’s why radar is transforming the way robots operate when lives are at stake.

Why Radar Is the Ultimate SAR Sensor

Disaster environments are unpredictable. Dust clouds from collapsed concrete, heavy rain, snow, smoke, steam, and low light are common. While optical sensors excel in clean, controlled settings, they break down quickly in real-world chaos. Radar, however, remains largely unaffected by:

- Obscurants such as dust, fog, smoke, and snow.

- Low or no-light conditions.

- Reflective or absorbing surfaces like wet concrete or twisted metal.

- Harsh weather and temperature shifts.

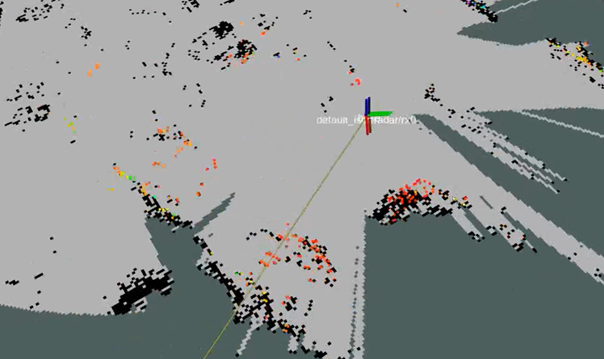

Modern high-definition 4D radars deliver dense point clouds that include per-point velocity and elevation, at an angular resolution that traditional radar systems could not achieve. This enables reliable detection of obstacles, moving objects, and structural features in degraded visual environments, which are precisely the conditions SAR robots must operate in.
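To make the idea of a 4D detection concrete, here is a minimal Python sketch. It is not a vendor format: the `RadarDetection` fields, the sign convention (positive radial velocity means the target is moving away), the 0.2 m/s threshold, and the assumption that the vehicle drives straight along the sensor's forward axis are all chosen for illustration. It converts a detection to Cartesian coordinates and flags points whose Doppler reading cannot be explained by the vehicle's own motion.

```python
import math
from dataclasses import dataclass


@dataclass
class RadarDetection:
    """One 4D radar point (hypothetical layout, not a real driver format)."""
    range_m: float
    azimuth_rad: float          # 0 = straight ahead, positive to the left
    elevation_rad: float        # 0 = horizontal, positive upward
    radial_velocity_mps: float  # assumed convention: positive = moving away


def to_cartesian(det):
    """Convert range/azimuth/elevation to (x, y, z) in the sensor frame."""
    horizontal = det.range_m * math.cos(det.elevation_rad)
    x = horizontal * math.cos(det.azimuth_rad)
    y = horizontal * math.sin(det.azimuth_rad)
    z = det.range_m * math.sin(det.elevation_rad)
    return (x, y, z)


def is_moving(det, ego_speed_mps, threshold_mps=0.2):
    """Flag a detection whose Doppler differs from that of a static point.

    A static point seen from a sensor moving forward at ego_speed_mps
    appears to approach with radial velocity -v * cos(az) * cos(el).
    """
    expected = (-ego_speed_mps
                * math.cos(det.azimuth_rad)
                * math.cos(det.elevation_rad))
    residual = det.radial_velocity_mps - expected
    return abs(residual) > threshold_mps
```

A real pipeline would estimate the ego velocity from the radar data or odometry and handle turning motion; this sketch only shows why per-point velocity is useful for separating moving objects from static structure.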

Localization When GNSS and Vision Fail

Collapsed infrastructure and underground rescue scenarios are GNSS-denied by default. Visual SLAM often fails due to repetitive structures, dust, and low texture. LiDAR SLAM can drift significantly when surfaces are uniform or reflective, or when the air is filled with particulate matter.

Radar-based localization, in contrast, thrives in these environments. By extracting stable radar features—edges, corners, reflective structures—and combining them with inertial and wheel odometry, a UGV can build a persistent, drift-resistant map. The CARMA system fuses:

- HD 2D scanning radar point clouds

- 4D radars for near-field coverage

- IMU for high-rate motion updates

- Wheel odometry

The result is a UGV that can autonomously explore, localize, and re-localize even in chaotic, cluttered indoor/outdoor transitions.
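The fusion idea can be sketched in a few lines of Python. This is not the CARMA implementation, which is not described here; it is a simple complementary-filter stand-in, assuming a planar pose `(x, y, theta)`, a hypothetical radar scan-matching step that occasionally supplies an absolute pose, and an illustrative blending gain. Wheel odometry and the IMU drive a high-rate prediction, and the radar pose pulls the estimate back to limit drift.

```python
import math


def wrap_angle(angle_rad):
    """Wrap an angle to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle_rad), math.cos(angle_rad))


def predict(pose, wheel_speed_mps, imu_yaw_rate_rps, dt_s):
    """Dead-reckon one step: wheel odometry for speed, IMU for turn rate."""
    x, y, theta = pose
    x += wheel_speed_mps * math.cos(theta) * dt_s
    y += wheel_speed_mps * math.sin(theta) * dt_s
    theta = wrap_angle(theta + imu_yaw_rate_rps * dt_s)
    return (x, y, theta)


def correct(pose, radar_pose, gain=0.3):
    """Blend in a radar-derived pose (assumed to come from scan matching).

    gain = 0 ignores the radar, gain = 1 trusts it completely.
    """
    x, y, theta = pose
    rx, ry, rtheta = radar_pose
    x += gain * (rx - x)
    y += gain * (ry - y)
    theta = wrap_angle(theta + gain * wrap_angle(rtheta - theta))
    return (x, y, theta)


# Example: ten odometry/IMU steps, then one radar correction.
pose = (0.0, 0.0, 0.0)
for _ in range(10):
    pose = predict(pose, wheel_speed_mps=1.0, imu_yaw_rate_rps=0.0, dt_s=0.1)
pose = correct(pose, radar_pose=(0.9, 0.05, 0.0))
```

A production system would more likely use a Kalman filter or factor graph with proper uncertainty models; the fixed gain here only illustrates the division of labor between the high-rate sensors and the radar.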

Perception Resilient Enough for Life-Saving Missions

Radar is more than a fallback sensor—it’s a perception cornerstone. For SAR operations, radar supports:

- Obstacle Detection in Dense Clutter: Radar can penetrate light debris and detect objects even when they are partially obscured, allowing UGVs to navigate tight voids without relying on clean line-of-sight.

- Motion Detection Through Occlusions: Micro-Doppler signatures can reveal movement behind smoke or light obstacles, which is critical for locating survivors or detecting shifting structures.

- Structural Assessment: High-resolution 4D radars can detect subtle motions in walls, floors, or ceilings, providing early warnings of secondary collapses.

- Robust Path Planning: Combined with radar-based elevation mapping, robots can traverse unstable terrain with fewer assumptions about lighting or surface features.
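As an illustration of the elevation-mapping idea in the last item, the Python sketch below bins Cartesian radar points into a 2D grid and checks whether the height step between two cells is small enough to drive over. The cell size, the 0.15 m step limit, and the choice to treat unobserved cells as non-traversable are assumptions made for this example, not values from the CARMA system.

```python
import math


def build_elevation_map(points, cell_size_m=0.25):
    """Map each grid cell (ix, iy) to the highest z seen in that cell."""
    grid = {}
    for x, y, z in points:
        cell = (math.floor(x / cell_size_m), math.floor(y / cell_size_m))
        grid[cell] = max(z, grid.get(cell, -math.inf))
    return grid


def is_step_traversable(grid, cell_a, cell_b, max_step_m=0.15):
    """Return True if the height change between two cells is within limits.

    Cells with no radar returns are treated as not traversable, which is
    a conservative assumption for unstable terrain.
    """
    if cell_a not in grid or cell_b not in grid:
        return False
    return abs(grid[cell_a] - grid[cell_b]) <= max_step_m


# Example: flat ground, a small bump, then a tall block of rubble.
points = [(0.1, 0.1, 0.0), (0.1, 0.1, 0.05), (0.3, 0.1, 0.1), (0.6, 0.1, 0.5)]
elevation = build_elevation_map(points)
```

A planner could use `is_step_traversable` as an edge test in a grid search; slope, roughness, and sensor noise would need to be handled in any real deployment.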

A Multi-Sensor Blueprint: Radar at the Core

The strongest SAR UGVs use radar as the foundation and fuse additional sensors on top:

- Stereo/Thermal Cameras: Provide semantic understanding when usable.

- LiDAR: Adds fine geometric detail when conditions allow.

This “radar-first autonomy stack” ensures the robot can always fall back to a reliable perception layer no matter how extreme the conditions become.
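One way to picture a radar-first stack is as a selection rule: radar is always part of the perception input, and other sensors are added only while they report usable data. The Python sketch below assumes a hypothetical per-sensor quality score between 0 and 1 (for example, derived from image contrast or LiDAR return density) and an illustrative threshold; neither comes from the CARMA project.

```python
def select_sources(quality, min_quality=0.5):
    """Pick the sensors to fuse, always keeping radar as the base layer.

    quality: dict mapping sensor name to a score in [0, 1] (hypothetical).
    Sensors missing from the dict are treated as unavailable.
    """
    active = ["radar"]
    for name in ("lidar", "stereo", "thermal"):
        if quality.get(name, 0.0) >= min_quality:
            active.append(name)
    return active


# Clear conditions: every sensor contributes.
clear = select_sources({"lidar": 0.9, "stereo": 0.8, "thermal": 0.7})

# Dense smoke: LiDAR and stereo degrade, thermal is still usable.
smoke = select_sources({"lidar": 0.2, "stereo": 0.1, "thermal": 0.8})
```

A hard threshold keeps the example short; weighting each sensor by its quality score would give a smoother handover as conditions change.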

A New Standard for Resilient Autonomy

In search and rescue, robustness isn’t optional—it is the entire mission. Radar gives UGVs a superpower: the ability to operate where humans cannot see, where traditional robotics fails, and where environmental chaos is the rule rather than the exception.

As autonomy advances, radar will increasingly define the core of next-generation SAR robots—machines capable of pushing deeper into disaster zones, mapping the unknown, and ultimately saving lives.